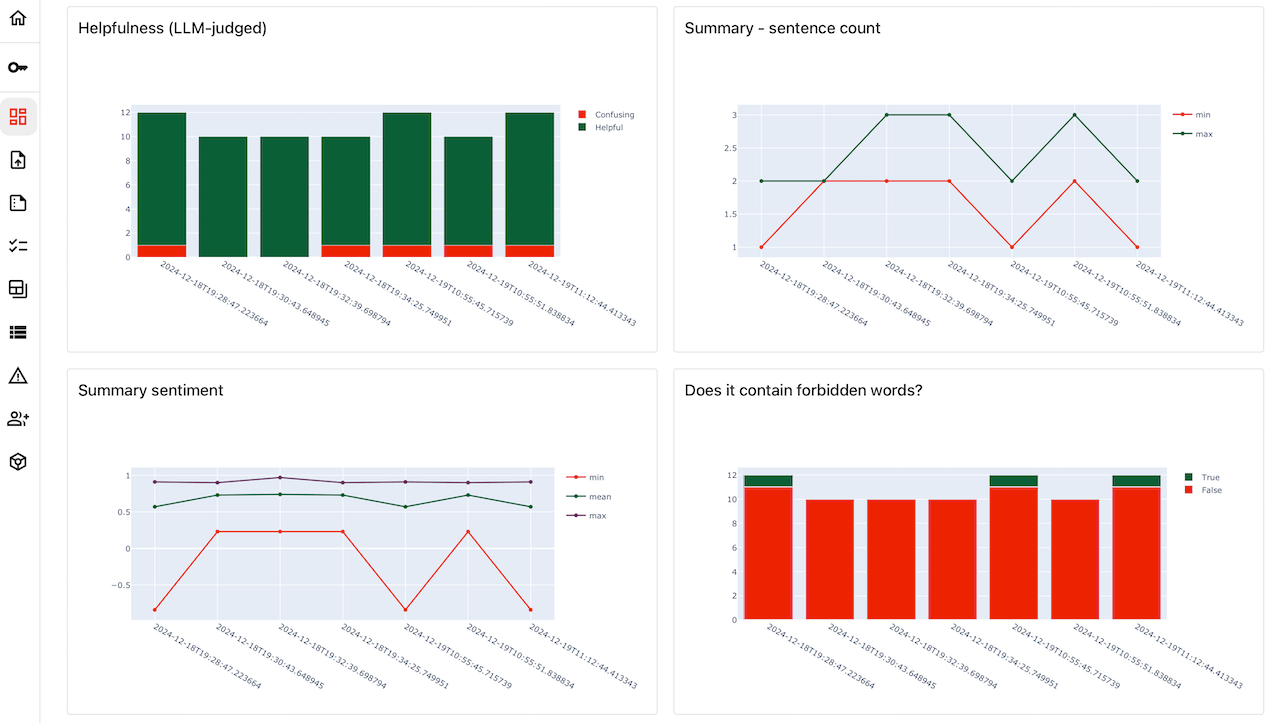

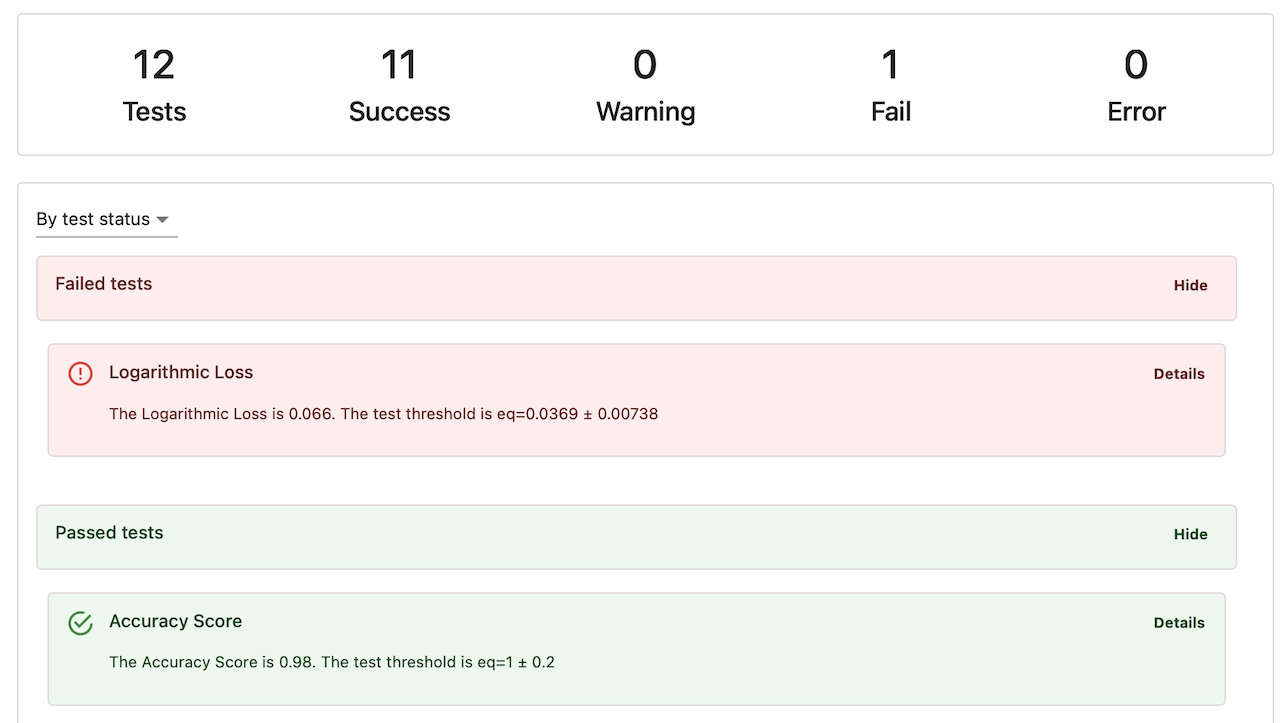

- Evidently is an open-source Python library with over 25 million downloads. It provides 100+ evaluation metrics, a declarative testing API, and a lightweight visual interface to explore the results.

- The Evidently Cloud platform offers a complete toolkit for AI testing and observability. It includes tracing, synthetic data generation, dataset management, eval orchestration, alerting, and a no-code interface for domain experts to collaborate on AI quality.

Get started

Run your first evaluation in a couple of minutes.

LLM evaluation

Evaluate the quality of LLM system outputs.

ML monitoring

Test tabular data quality and data drift.

Feature overview

What you can do with Evidently.

Evidently Platform

Key features of the AI observability platform.

Evidently library

How the Python evaluation library works.